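Python multithreading is an old topic, and there is plenty of material about it online. Python's standard threading module supports multithreading, but in practice multiple threading.Thread instances cannot run in parallel (note: they cannot run in parallel, but they can still run concurrently!).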

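The answer in one sentence: a Python thread is really a native operating-system thread, and to execute Python code a thread must hold the lock of the corresponding interpreter, the GIL. A Python program normally runs with a single interpreter, so all threads have to acquire the same lock to run their code. They exclude each other, and therefore their code cannot run at the same time.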

Now let's walk through the story behind that sentence in detail:

Testing whether threads run in parallel

First, let's use some code to test whether multiple threads really run in parallel:

```python
import threading
```

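This test code performs the += 1 operation COUNT times in total, once in a single thread and once split across multiple threads. The results show no noticeable difference in elapsed time between the two approaches: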

```
[SINGLE_THREAD] start by count pair: (0, 100000000)
```
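Only the first line of the original test code survives, so here is a minimal sketch of a benchmark along the same lines. The names count, make_pairs, run_single and run_multi, and the value of COUNT, are hypothetical (the output above suggests the original used 100000000; a smaller value keeps this quick). On a standard CPython build with a GIL, the multi-threaded run should not be meaningfully faster than the single-threaded one.

```python
import threading
import time

COUNT = 2_000_000  # hypothetical workload; the article's output suggests 100000000

def count(start: int, end: int) -> int:
    """CPU-bound work: perform n += 1 once for every value in [start, end)."""
    n = 0
    for _ in range(start, end):
        n += 1
    return n

def make_pairs(total: int, workers: int) -> list:
    """Split [0, total) into `workers` contiguous (start, end) pairs."""
    step = total // workers
    bounds = [i * step for i in range(workers)] + [total]
    return list(zip(bounds[:-1], bounds[1:]))

def run_single(total: int) -> float:
    t0 = time.perf_counter()
    count(0, total)
    return time.perf_counter() - t0

def run_multi(total: int, workers: int = 4) -> float:
    # Each thread handles one (start, end) pair; together they cover [0, total)
    threads = [threading.Thread(target=count, args=pair) for pair in make_pairs(total, workers)]
    t0 = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - t0

if __name__ == "__main__":
    print(f"[SINGLE_THREAD] {run_single(COUNT):.3f}s")
    print(f"[MULTI_THREAD]  {run_multi(COUNT):.3f}s")
```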

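This indirectly shows that threading.Thread-based multithreading does not run fully in parallel. To dig into this properly, we need to look at how threads run inside Python.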

How a thread is started

Let's first look at how a thread is started, beginning directly with the start method of the Thread class:

```python
class Thread:
```

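Behaviorally, the start method does the following:

- First, it checks that the Thread instance has been initialized and has not been started before.
- Then it adds itself to _limbo.
- It calls _start_new_thread, the function that starts the thread, passing along its own _bootstrap method. _bootstrap is the Python-side logic that finally begins running the thread's task, and it runs in the new thread! We will see this step by step later.
- It waits for the signal from self._started (an Event instance).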

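First, the role of _limbo. Gamers will know "limbo" from the game Limbo (whose Chinese title means "the border of hell"); looking the word up in a dictionary, we can simply read it as a "ready state". The thread is first recorded as being in the ready state, and only then does the actual thread-start process begin. That process happens at the C level: if we jump to the definition of _start_new_thread, we can see there is no corresponding Python code.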

```c
// _threadmodule.c
```

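Python's _start_new_thread corresponds to thread_PyThread_start_new_thread at the C level. Of its two parameters, self is the _thread module object and fargs is the argument tuple from the Python call, (self._bootstrap, ()), which is unpacked into func = self._bootstrap and args = the empty tuple. The main steps of _start_new_thread are: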

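- Unpack fargs and validate its contents. Since args is just an empty tuple here, there is not much to analyze for now.
- Set up a bootstate instance, boot. A bootstate bundles the function and its arguments together with the corresponding thread state, interpreter state and runtime state, covering everything needed to start the new thread.
- Call PyThread_start_new_thread, passing in the bootstate instance and a callback function, t_bootstrap.
  - Its return value is the thread's ID, which on the Python side is also available through the thread instance's ident attribute.
  - The t_bootstrap callback is what runs inside the newly started child thread!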

PyThread_start_new_thread has different definitions depending on the operating system. Taking Windows as an example, it is defined as follows:

```c
// thread_nt.h
```

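The parameters func and arg correspond to the t_bootstrap callback and the bootstate instance. To fit the signature that Windows' _beginthreadex expects, t_bootstrap and the bootstate instance are packed once more into callobj, which becomes the argument of bootstrap (an adapter function) and is passed to _beginthreadex together with bootstrap.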

At this point we can be sure that the new threads Python starts are native operating-system threads.
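One way to see the native-thread nature from Python is the kernel-assigned thread ID. This sketch assumes Python 3.8+ on a platform where threading.get_native_id() and Thread.native_id are available (Windows, Linux, macOS and several BSDs); the helper name worker is illustrative.

```python
import threading

inside: dict = {}

def worker() -> None:
    # The OS-level thread ID, as seen from inside the new thread
    inside["native"] = threading.get_native_id()

t = threading.Thread(target=worker)
t.start()
t.join()

print(t.native_id == inside["native"])           # True
print(t.native_id != threading.get_native_id())  # True: not the main thread's ID
```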

When the new thread is born, it calls bootstrap, which unpacks callobj and calls func(arg), that is, t_bootstrap(boot):

```c
// _threadmodule.c
```

Back in t_bootstrap, we see that it eventually takes func and args out of boot and calls func(args) through PyObject_Call. Looking back at the earlier steps, this func(args) is exactly self._bootstrap() on the Python side:

```python
class Thread:
```

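Inside self._bootstrap_inner(), the main steps are:

- Notify self._started, which completes the start flow on the Python side that we saw earlier.
- Remove itself from the ready state _limbo and add itself to the active state (_active).
- Execute self.run, which begins the thread's own logic.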

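With that, the full picture of how Python starts a new thread is in front of us. Beyond where threads come from, the answers to many basic threading questions also become obvious, for example why you should not call self.run directly: a direct call just executes the target in the current thread, because it never goes through _start_new_thread and no new OS thread is created.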
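A quick check of the run-versus-start difference: record which thread actually executes the target in each case. The helper name report is illustrative.

```python
import threading

def report(where: list) -> None:
    # Record the identifier of the thread that actually runs the target
    where.append(threading.get_ident())

main_ident = threading.get_ident()

via_run: list = []
t1 = threading.Thread(target=report, args=(via_run,))
t1.run()    # plain method call: runs in the current (main) thread

via_start: list = []
t2 = threading.Thread(target=report, args=(via_start,))
t2.start()  # start -> _start_new_thread -> _bootstrap -> run, in a new OS thread
t2.join()

print(via_run[0] == main_ident)    # True
print(via_start[0] != main_ident)  # True
print(t1.ident is None)            # True: t1 was never started
```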

How a thread executes code

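In the previous section we learned where Python's new threads come from. However, only by dissecting how a thread executes code can we pin down why Python threads cannot run in parallel.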

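When a thread performs its task, everything ultimately comes down to the run method. First, let's use dis, Python's built-in disassembly module, to view the opcodes of Thread's run function, and then analyze further by following the logic Python uses internally to execute each opcode: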

```python
class Thread:
```

The line that actually calls the function, self._target(*self._args, **self._kwargs), corresponds to these opcodes:

```
910           8 LOAD_FAST                0 (self)
```

Clearly CALL_FUNCTION_EX, which performs the function call, is the opcode we need to find.
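To reproduce this on your own interpreter, a sketch like the following filters the disassembly of Thread.run down to its call-family opcodes. Opcode names and offsets vary between CPython versions, so the exact listing may differ from the one above; the *args/**kwargs call is expected to appear as CALL_FUNCTION_EX on the versions the article discusses.

```python
import dis
import threading

# Keep only the call-family opcodes of Thread.run; names depend on the CPython version
call_ops = [ins.opname for ins in dis.get_instructions(threading.Thread.run)
            if ins.opname.startswith("CALL")]
print(call_ops)

# The full listing (source line, offset, opcode, argument), like the excerpt above
dis.dis(threading.Thread.run)
```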

```c
// ceval.c
```

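In ceval.c, the huge function _PyEval_EvalFrameDefault is the one that interprets opcodes; inside it we can search for any opcode and study its logic. Following the logic for CALL_FUNCTION_EX, we can trace the function-call process step by step, and it eventually lands here: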

```c
// call.c
```

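In _PyObject_Call, every way of calling a function ends up in a generic form (vectorcall or Py_TYPE(callable)->tp_call), which means different kinds of callables may need different calling paths to handle them. Based on this, we can debug the run method of Thread directly (running it on the main thread is enough) to observe how the thread's run function is called. The test code is as follows: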

```python
from threading import Thread
```

The self._target(*self._args, **self._kwargs) line in t.run takes the PyVectorcall_Call branch of _PyObject_Call. Stepping into it all the way, we finally arrive at the _PyEval_EvalFrame function:

```c
static inline PyObject*
```

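A frame is the unit on Python's function-call stack (similar to CallInfo in Lua) and holds the information about one function call. eval_frame parses and executes the code object stored in the frame. The interpreter used is tstate->interp. From the thread-start logic above, in thread_PyThread_start_new_thread the main thread hands its own interp to the child thread, so no matter how many threads are created, they all share the same interpreter. So what is the interpreter's eval_frame? After a long detour, we are back at the huge function _PyEval_EvalFrameDefault.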
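One observable consequence of all threads sharing one interpreter is that they see the very same per-interpreter state, such as the sys.modules table and module globals. The names worker, shared and seen_modules below are illustrative, and this only illustrates the shared interpreter rather than proving it.

```python
import sys
import threading

lock = threading.Lock()
shared = {"hits": 0}
seen_modules: list = []

def worker() -> None:
    # Same interpreter: same module table and same global objects as the main thread
    with lock:
        seen_modules.append(sys.modules)
        shared["hits"] += 1

threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(all(m is sys.modules for m in seen_modules))  # True
print(shared["hits"])                                # 4
```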

From the main_loop goto label in _PyEval_EvalFrameDefault onward, the function loops forever processing opcodes. But before the switch on the opcode, there is a check:

```c
// ceval.c
```

This code first checks whether evaluation has hit the interrupt condition eval_breaker; if it has, it may go into eval_frame_handle_pending to handle the interrupt.

```c
// ceval.c
```

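eval_frame_handle_pending handles several situations that interrupt opcode evaluation. Here we can see that whichever thread gets here, if it finds gil_drop_request set, it must call drop_gil to hand the GIL to other threads, and then call take_gil to compete for the interpreter lock again.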
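The control flow of that check can be modeled in a few lines of single-threaded Python. ToyEvalState, handle_pending, eval_loop, work and request_drop are hypothetical names; the model is simplified (it checks the flag before every toy opcode and only records drop_gil/take_gil instead of touching a real lock), so treat it as a sketch of the idea, not of ceval.c itself.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class ToyEvalState:
    # Hypothetical stand-ins for CPython's eval_breaker and gil_drop_request flags
    eval_breaker: bool = False
    gil_drop_request: bool = False
    log: List[str] = field(default_factory=list)

def handle_pending(state: ToyEvalState) -> None:
    # Toy counterpart of eval_frame_handle_pending
    if state.gil_drop_request:
        state.gil_drop_request = False
        state.log.append("drop_gil")  # hand the lock to another thread...
        state.log.append("take_gil")  # ...then compete to get it back
    state.eval_breaker = False

def eval_loop(opcodes: List[Callable[[ToyEvalState], None]], state: ToyEvalState) -> None:
    for op in opcodes:             # toy "main_loop"
        if state.eval_breaker:     # checked before dispatching the next opcode
            handle_pending(state)
        op(state)

def work(state: ToyEvalState) -> None:
    state.log.append("op")         # an ordinary opcode

def request_drop(state: ToyEvalState) -> None:
    # Simulates another thread running SET_GIL_DROP_REQUEST
    state.gil_drop_request = True
    state.eval_breaker = True

demo = ToyEvalState()
eval_loop([work, request_drop, work], demo)
print(demo.log)  # ['op', 'drop_gil', 'take_gil', 'op']
```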

When we covered thread startup, the t_bootstrap function called by the new thread contained the line PyEval_AcquireThread(tstate), which includes the take_gil logic. Let's see what take_gil actually does:

```c
// ceval_gil.h
```

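Here is the logic: while the GIL stays held, the thread enters the while loop's COND_TIMED_WAIT and waits for a signal on gil->cond. That signal is sent inside drop_gil, so it fires when another thread executes drop_gil. Because Python threads are native OS threads, digging into the implementation of COND_TIMED_WAIT shows that it is really the operating system that wakes the thread once the signal fires. COND_TIMED_WAIT waits for gil->interval, which corresponds to sys.getswitchinterval(), the thread switch interval (CPython stores it in microseconds, while the Python API uses seconds). If, after that time, the original thread still holds the GIL and no switch has happened, the waiting thread forcibly triggers SET_GIL_DROP_REQUEST: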

```c
static inline void
```

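SET_GIL_DROP_REQUEST forcibly sets gil_drop_request and eval_breaker. Then, when the thread holding the GIL evaluates a frame and finds eval_breaker set, it goes through eval_frame_handle_pending, sees gil_drop_request there, and calls drop_gil to release the interpreter lock. Meanwhile, the waiting thread, during one of its COND_TIMED_WAIT calls after SET_GIL_DROP_REQUEST, may be signaled early, find that the GIL is no longer locked, continue with the rest of the logic and take the GIL. In the end, the other thread gets to execute code, while the thread that dropped the GIL calls take_gil again in eval_frame_handle_pending and is now the one competing for the right to run. And so it repeats, round after round.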
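The whole handshake can be simulated with a threading.Condition. ToyGIL, toy_thread and the force_switch flag are hypothetical names; the field names loosely mirror ceval_gil.h, but the logic is a simplified sketch (for instance, "opcodes" are just list appends plus a short sleep, and the forced-switch wait only imitates CPython's FORCE_SWITCHING idea). The toy uses sys.getswitchinterval() as its timeout so that it waits about as long as the real GIL would.

```python
import sys
import threading
import time

class ToyGIL:
    """Toy model of CPython's take_gil/drop_gil handshake, not the real code."""

    def __init__(self, interval: float) -> None:
        self.interval = interval           # stands in for gil->interval (seconds here)
        self.cond = threading.Condition()  # stands in for gil->mutex + gil->cond
        self.locked = False                # stands in for gil->locked
        self.switch_number = 0             # stands in for gil->switch_number
        self.drop_request = False          # stands in for gil_drop_request / eval_breaker

    def take(self) -> None:
        with self.cond:
            while self.locked:
                saved = self.switch_number
                signaled = self.cond.wait(self.interval)  # like COND_TIMED_WAIT
                if not signaled and self.locked and self.switch_number == saved:
                    self.drop_request = True              # like SET_GIL_DROP_REQUEST
            self.locked = True
            self.switch_number += 1
            self.drop_request = False                     # request satisfied
            self.cond.notify_all()                        # wake a thread waiting in drop()

    def drop(self, force_switch: bool = False) -> None:
        with self.cond:
            self.locked = False
            self.cond.notify_all()
            if force_switch:
                # Wait until another thread has actually taken the lock,
                # so the dropping thread cannot immediately grab it back.
                saved = self.switch_number
                while self.switch_number == saved:
                    self.cond.wait()

def toy_thread(gil: ToyGIL, name: str, steps: int, trace: list) -> None:
    gil.take()
    for _ in range(steps):
        trace.append(name)      # "execute one opcode"
        time.sleep(0.001)       # pretend the opcode takes some time
        if gil.drop_request:    # eval_breaker check before the next opcode
            gil.drop(force_switch=True)
            gil.take()
    gil.drop()

gil = ToyGIL(interval=sys.getswitchinterval())
trace: list = []
workers = [threading.Thread(target=toy_thread, args=(gil, n, 50, trace)) for n in "AB"]
for w in workers:
    w.start()
for w in workers:
    w.join()

switches = sum(1 for a, b in zip(trace, trace[1:]) if a != b)
print(f"{len(trace)} toy opcodes, {switches} hand-offs")
```

Only one toy thread ever appends to trace at a time, which is the point: the two threads interleave, but never overlap.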

Summary

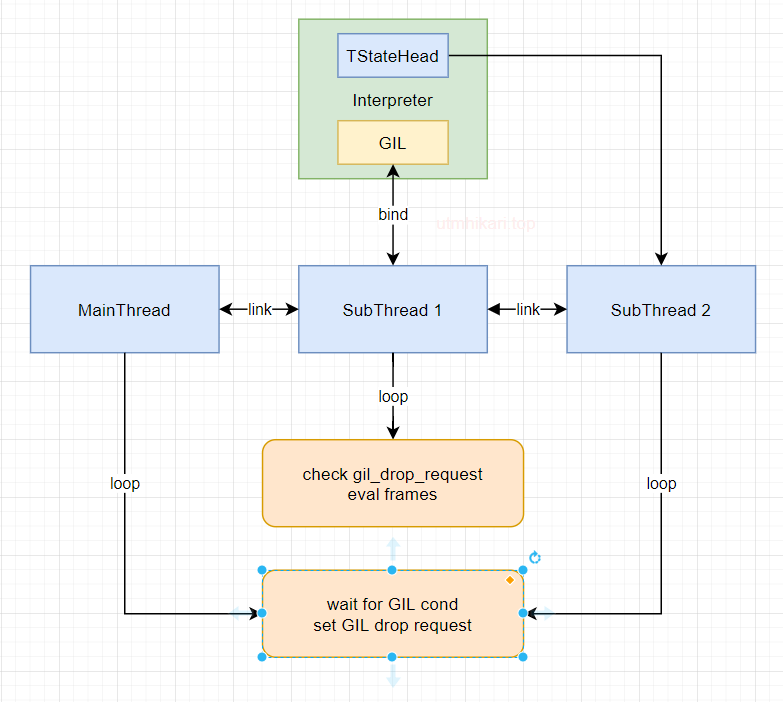

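Having dissected the thread startup mechanism, the code execution mechanism and the GIL-based thread scheduling mechanism, we can answer the question "why can't Python threads run in parallel" with the single diagram above.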